AI is something we are all going to need to learn to deal with

I recently set up a segregated server for AI-based experimentation on behalf of a client. After messing around with the available AI options in April of 2023 I have a few comments:

I’m not going to say this technology is a “game changer,” but it has some serious potential. And there are some serious risks looking forward. Right now I think there is a gap between what people think this technology is and what it actually is, and there are some significant advantages for organizations who embrace this technology and learn how to harness AI’s advantages before their competitors do.

So, here’s your heads-up on what’s out there.

AI-generated Web Page Illustrations

Photos are trivial in the grand scheme of things, but I think the advances in AI-based image generation are a really visual way to understand the sorts of changes we’re looking at with AI. I also think this is an easier way to explain what “AI” is doing in the background, whether it’s with images, or text, or something else.

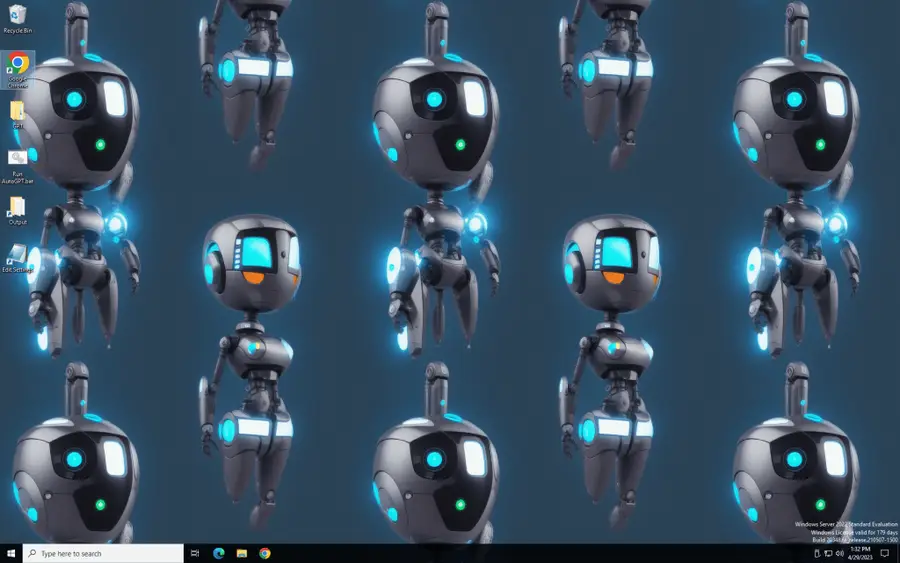

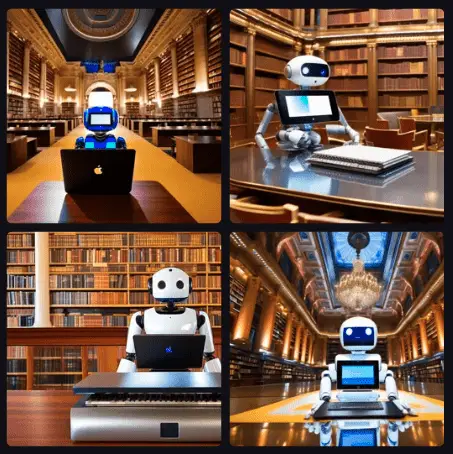

I just fired up a free image-generation application powered by something called Stable Diffusion. On my lowly, obsolete graphics card (an RTX 2060) I got the following images in about 60 seconds. I picked my favorite and it’s the one you see at the top of this page.

These images were generated in response to the prompt “A futuristic robot typing on a laptop, sitting in an opulent library, helpful, intelligent.” I could spend time crafting the image and getting exactly what I want, but for the purpose of illustration there’s no point putting more effort into it. I spent more time making the bottom-right image the featured image for this post than I did creating it, and that’s saying something.

This technology is still new, but speaking as someone who used to make his living with a camera: a whole lot of well-paying jobs are about to be in serious jeopardy.

Today, if you can describe what you’re looking for in an illustration (or even in something that looks like a photo) you can get something royalty free in seconds, at no cost other than your time. That’s transformative.

Two more examples: name the holidays:

So how does this work?

The best I can explain it…well, I bogged down here, so I asked ChatGPT to explain it for me. It struggled a bit, but it got closer when I asked it to explain a particular model used in AI art generation:

What that means in this context is that researchers build software that can recognize images. Textual descriptions are tied to each image, and the “steps” in this instance are steps where random noise is added to an image while training the software, so you go in steps from the original image down to something that looks like random noise. The program is trained to remove noise in steps, moving back toward the image described in the prompts.

Now, to generate an image you simply feed it the prompt describing your desired image, and give it an ‘image’ made up of nothing but random pixels, and boom: it’s “creating” art by removing noise in steps, looking in the noise for “signal” that matches the prompt, so it can discover the image you’ve told it exists there.

(Yes this is overly simplified.)
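For readers who like to see the arithmetic, here is a toy sketch of the noise-in-steps idea in plain Python. It is an illustration under loud assumptions, not how Stable Diffusion is actually implemented: the five-number "image", the linear noise schedule, and the function names are all made up for this example, and the step where a trained network predicts the noise from the prompt is replaced by simply handing back the true noise.

```python
import math
import random

def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Return how much of the original image survives after each noising step."""
    alpha_bar, schedule = 1.0, []
    for i in range(steps):
        # Assumed linear schedule: a little more noise is mixed in at every step.
        beta = beta_start + (beta_end - beta_start) * i / max(steps - 1, 1)
        alpha_bar *= 1.0 - beta
        schedule.append(alpha_bar)
    return schedule

def add_noise(pixels, alpha_bar, rng):
    """Training direction: blend the clean image with random Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise

def remove_noise(noisy, predicted_noise, alpha_bar):
    """Generation direction: subtract the predicted noise to estimate the image."""
    return [(x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for x, n in zip(noisy, predicted_noise)]

rng = random.Random(0)
image = [0.0, 0.25, 0.5, 0.75, 1.0]      # a tiny stand-in "image"
schedule = make_schedule(steps=1000)
noisy, true_noise = add_noise(image, schedule[-1], rng)

# A real model would *predict* the noise from the noisy pixels and the prompt.
# Here we cheat and pass in the true noise, just to show the arithmetic works.
recovered = remove_noise(noisy, true_noise, schedule[-1])
```

After the last step almost none of the original image is left in `noisy`, which is why generation can start from pure random pixels. Everything interesting in a real system lives in the noise-prediction step this sketch skips.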

The problem here is that the software isn’t creating, say, “a futuristic cyborg girl in the style of blade-runner as photographed on Portra film using a Leica 50mm f0,95 lens”; rather, it’s giving you an image that it thinks looks like the image you’re describing, hidden in the noise. So it’s not generating new art; rather it’s drawing on the traits it learned from millions of training images (it doesn’t keep a database of the images themselves), trying to find something that matches the traits you’re describing as it removes dozens of levels of random noise, and getting as close as it can to what it’s guessing is an original image that it has never seen.

Which doesn’t matter at all if your business is drawing covers for science fiction novels. This is the result of the prompt listed in the previous paragraph. Note that as far as I’m aware this technology is less than 6 months old at this point, and there are lots of experimenters out there.

Why this is an issue

GPT, for lack of a better word, lies. And it lies convincingly.

The tweet above is similar to many you can find on Twitter. You ask ChatGPT (or other virtual assistant models) a question and you assume it’s answering it for you. It’s not. What it’s really doing is telling you what it thinks an answer to your query would look like. And sometimes that means finding a well-respected author and creating a source citation for a journal article that was never published.

Not because the AI is lying in that sense, but because it thinks an accurate response to your question would look like that, including a quote from that caliber of author in that quality of journal, around that date.

And if you don’t double check behind it, you’ll look like an idiot.

It’s not malicious – it’s just doing something behind the scenes that’s different from what it looks like it’s doing.

With that said…

ChatGPT

I asked ChatGPT what it was, and it answered that question well:

That hints at some of its limitations:

- It’s not aware of anything that’s not in its dataset, and that dataset ended in 2021.

- It’s censored. Things like “hate speech” aren’t allowed, which means that as it’s coded it will refuse to write positive prose about Donald Trump, but it will be effusive about Joe Biden. The same goes for any remotely political topic: the inherent bias of the system matches that of mainstream news, or modern universities. This makes sense from a business and political perspective, but it makes it hard to find some facts unless you’re willing to spend the time to creatively work around the filters.

- Complex tasks can be difficult – it can’t learn new information in order to solve a new task.

Auto-GPT

Auto-GPT is an open-source program that depends on the same back-end that ChatGPT uses, so you need a paid OpenAI account to get access to the API. The differences that are worth noting here are as follows:

- It is capable of finding new information. Auto-GPT will query Google for information, and is capable of browsing web sites in search of the information it thinks it needs.

- It figures out how to solve problems itself. You tell it what its goals are, and it creates sub-tasks to accomplish those goals and spawns new AIs to accomplish those tasks. The more information it gathers, the more detailed the sub-tasks it creates in order to home in on its problem.

- It can remember. Not perfectly, but it can compile information, then seek more, and remember past queries. Sometimes, at least.

- You can feed it custom datasets. If you’ve got information you want it to analyze, you can place it in the folder it’s allowed to read from and write to, and have it analyze the data. This can be more complex than simple use of the program (you may need to manually read the text data into memory for it), but this is an evolving experiment after all.

- It can write to your local machine and create files. This is good if you’re asking it to do research and record it. It can be bad if it thinks it needs to install and execute new software in order to solve its problem. (Yes, I’ve killed a process after it started git-cloning the software project I asked it to analyze. Sometimes it will try to install copies of itself.)

- It’s extensible. For instance, you can have it post directly to your Twitter feed if you’re daring enough.
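The goal, sub-task, and approval cycle described in the list above can be sketched in a few lines of Python. This is a simplified illustration, not Auto-GPT’s actual code: `plan`, `run_agent`, and the fixed two-step plan are hypothetical stand-ins, and a real agent would ask the language model to produce the sub-tasks and would actually execute each one.

```python
from collections import deque

def plan(goal):
    """Stand-in for the language model: split a goal into sub-tasks (hypothetical)."""
    return [f"search the web for: {goal}", f"write findings to file for: {goal}"]

def run_agent(goals, approve, max_steps=10):
    """Work through sub-tasks one at a time, asking for approval before each."""
    queue = deque(task for goal in goals for task in plan(goal))
    memory = []                       # notes the agent keeps between steps
    while queue and len(memory) < max_steps:
        task = queue.popleft()
        if not approve(task):         # the human can stop the run at any step
            break
        memory.append(f"done: {task}")
    return memory

# Approve everything automatically, purely for demonstration.
log = run_agent(["who was the first pick in the NFL draft"], approve=lambda task: True)
```

The `max_steps` cap and the `approve` callback are the two places where a human keeps control, which matters given how readily the real thing wanders off.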

A Quick Note on Versions

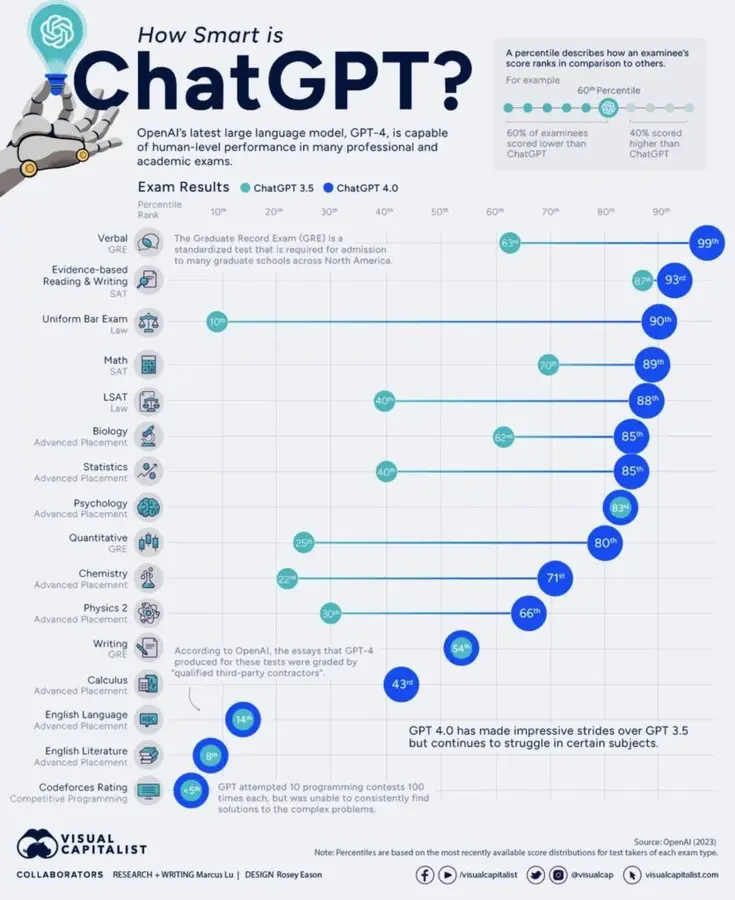

So far I only have access to GPT-3.5 and am on the waitlist for GPT-4 access. Everything I’ve done so far is in GPT-3.5, and it looks like GPT-4 is a notable improvement. This will only get better over time:

That may be hard to read. The cyan dots are GPT-3.5’s performance on various tests, and the blue are GPT-4’s. So GPT-3.5 scored in the 62nd percentile on the AP Biology exam, where GPT-4 scored in the 85th percentile.

See why this is important? For $20 per month anyone can have access to a chatbot that can score in the 90th percentile on the bar exam, and that scored in the 93rd percentile on the SAT’s evidence-based reading and writing section. That’s world changing, if you understand how to use it and you understand and work around its limitations.

Anyway, back to Auto-GPT

My Installation

The environment I created is a Windows server, locked down pretty tightly, accessible over a VPN. Users connect via Remote Desktop, and they see something fairly similar to their normal desktop, with a background to remind them that this is the AI testing server:

Auto-GPT can be complicated to set up, so I’ve done the install and all the user needs to do in order to start the program is double-click a batch file. Settings can be edited by double-clicking a link that’s on the desktop, and output from the software is delivered to a folder that’s also linked from the desktop. This is as ‘point and click’ as I can make the software, but it’s still text-based. Each user has their own independent installation.

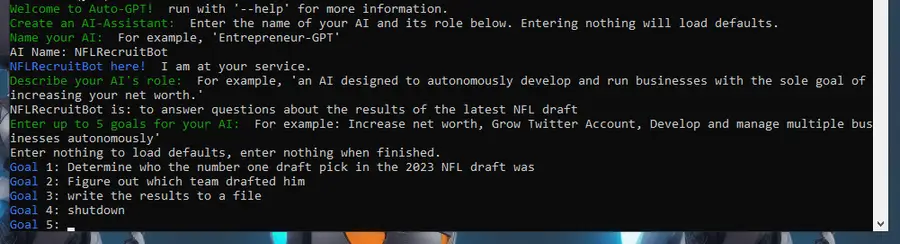

When you run the software, it starts by asking if you would like to continue the previous session. The answer is mostly “no.” This starts a new session, and you get to tell the software the name of the AI assistant you’re creating, its purpose, and give it up to five goals to accomplish.

In this case, we asked the software to figure out who was the number one pick in the NFL draft a couple of nights ago, along with the name of the team that drafted him.
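To make the shape of that setup concrete, here is a small Python sketch of a session definition: a name, a purpose, and a capped list of goals. The class name, field names, the “DraftBot” name, and the wording of the goals are all assumptions made up for illustration; they are not Auto-GPT’s own data structures or the exact text entered on the server.

```python
from dataclasses import dataclass, field

MAX_GOALS = 5  # the "up to five goals" limit described above

@dataclass
class AssistantConfig:
    name: str                                   # what the assistant is called
    role: str                                   # its purpose, in a sentence
    goals: list = field(default_factory=list)   # what it should accomplish

    def __post_init__(self):
        if len(self.goals) > MAX_GOALS:
            raise ValueError(f"at most {MAX_GOALS} goals are allowed")

# Hypothetical values loosely modeled on the NFL draft example.
config = AssistantConfig(
    name="DraftBot",
    role="an assistant that looks up recent sports news",
    goals=[
        "Find out who was the number one pick in this week's NFL draft",
        "Find the name of the team that drafted him",
        "Write the answer to a file and shut down",
    ],
)
```

Short, specific goals with an explicit stopping condition tend to be the safest thing to hand to an agent that pays per step.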

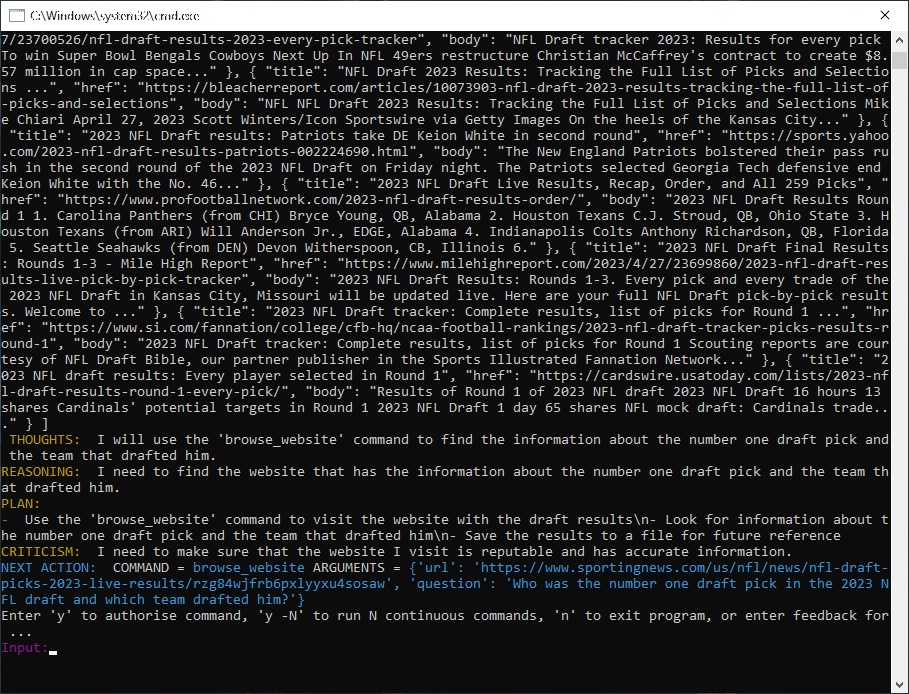

Let’s run it:

I approved the Google search.

Note that since every action has an associated cost for using the API, you will be asked to approve every step before it takes place. You can say “y -15” to approve the next 15 steps if you like. The last time I did that, though, the software started trying to install a copy of itself and of the competitor I’d asked it to compare itself to. So it makes sense to run this in a locked-down, virtualized environment, and to keep an eye on it.
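As a rough illustration of that approval prompt, the sketch below turns a typed reply into a count of authorized steps. The function name and the exact parsing rules are assumptions for this example, not Auto-GPT’s real input handling; the only detail taken from the text is that “y” approves one step and “y -15” approves the next 15.

```python
def parse_approval(reply):
    """Return how many upcoming steps the reply authorizes (0 means stop)."""
    parts = reply.strip().lower().split()
    if not parts or parts[0] != "y":
        return 0                      # anything other than "y ..." is a refusal
    if len(parts) == 1:
        return 1                      # plain "y": approve just the next step
    if len(parts) == 2 and parts[1].startswith("-") and parts[1][1:].isdigit():
        return int(parts[1][1:])      # "y -N": approve the next N steps
    return 0                          # malformed input: fail closed

steps_allowed = parse_approval("y -15")
```

Treating anything unrecognized as a refusal is a deliberate choice here: when every step costs money and may touch the file system, failing closed is the cheaper mistake.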

Also entirely appropriate. I gave it permission, and it fired up a Chrome window and analyzed it.

From here, the bot:

- Queried Google again to find a different source

- Visited the NY Times this time

- Asked for permission to write to a file

- Shut down

More Complex Tasks

That task was fairly trivial, but people are using it for much more complex tasks:

- Research on competitors.

- Investment research

- SEO – point it to your web site and have it make recommendations on how to rank higher in Google.

- Finding which topics seem to do well on (name your social media platform) and recommending 10 articles it thinks would perform well.

- Have it actually write said articles. Then tweet links to them.

There’s not really a limit to what sorts of tasks you can attempt, but there are limits to what it’s capable of:

- It may get stuck in an endless loop of visiting a site, reading it, writing the notes to file, re-googling the topic, and going back to the same site. For the 5th time.

- It can get basic things like math wrong. You need to double-check your answers here.

- It can try to install software you didn’t ask for. If it fails to do so (say because of a security control you have in place) it may google how to circumvent the control, essentially trying to hack your system in order to perform the task you gave it.

- It can get hyper-focused on non-essential things. I watched a guy who sounded German live-stream his experiments with Auto-GPT looking at soon-to-be-published scientific articles trying to learn new facts about viruses that affect the respiratory system, then tweet these out. Sometimes it decided it needed to do a deep dive on related, but not essential information – certain proteins, viruses that affect other systems, etc.
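The endless-loop problem in the list above is the kind of thing a small guard around the agent can catch. The sketch below counts how often the same action has been requested and refuses once a limit is passed. It is a hypothetical wrapper written for illustration (the names `make_loop_guard` and `allow` and the example URL are invented), not a feature of Auto-GPT.

```python
from collections import Counter

def make_loop_guard(max_repeats=3):
    """Build a checker that rejects an action once it has been seen too often."""
    seen = Counter()

    def allow(action):
        seen[action] += 1
        return seen[action] <= max_repeats

    return allow

allow = make_loop_guard(max_repeats=2)
visits = [allow("browse https://example.com/draft") for _ in range(4)]
```

A guard like this only catches exact repeats, so an agent that rephrases its search each time would slip past it. Watching the session remains the real safeguard.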

This is still very much an experiment. It’s useful for some tasks, and will likely become more useful once I get access to the GPT-4 model. But it’s still a new thing, and we’re not really sure what it is, or what it’s good for.

With that said, the potential here is too huge to ignore. AI will likely affect big parts of our lives, and this is something forward-looking people will invest in understanding early.

After hitting “publish” here I checked Twitter and found the tweet above.

That’s using OpenBB (which is an open-source Bloomberg Terminal workalike) with Auto-GPT to perform real-world analysis.

We’re still in the early days, but this is exciting.